INSTRUMENTATION

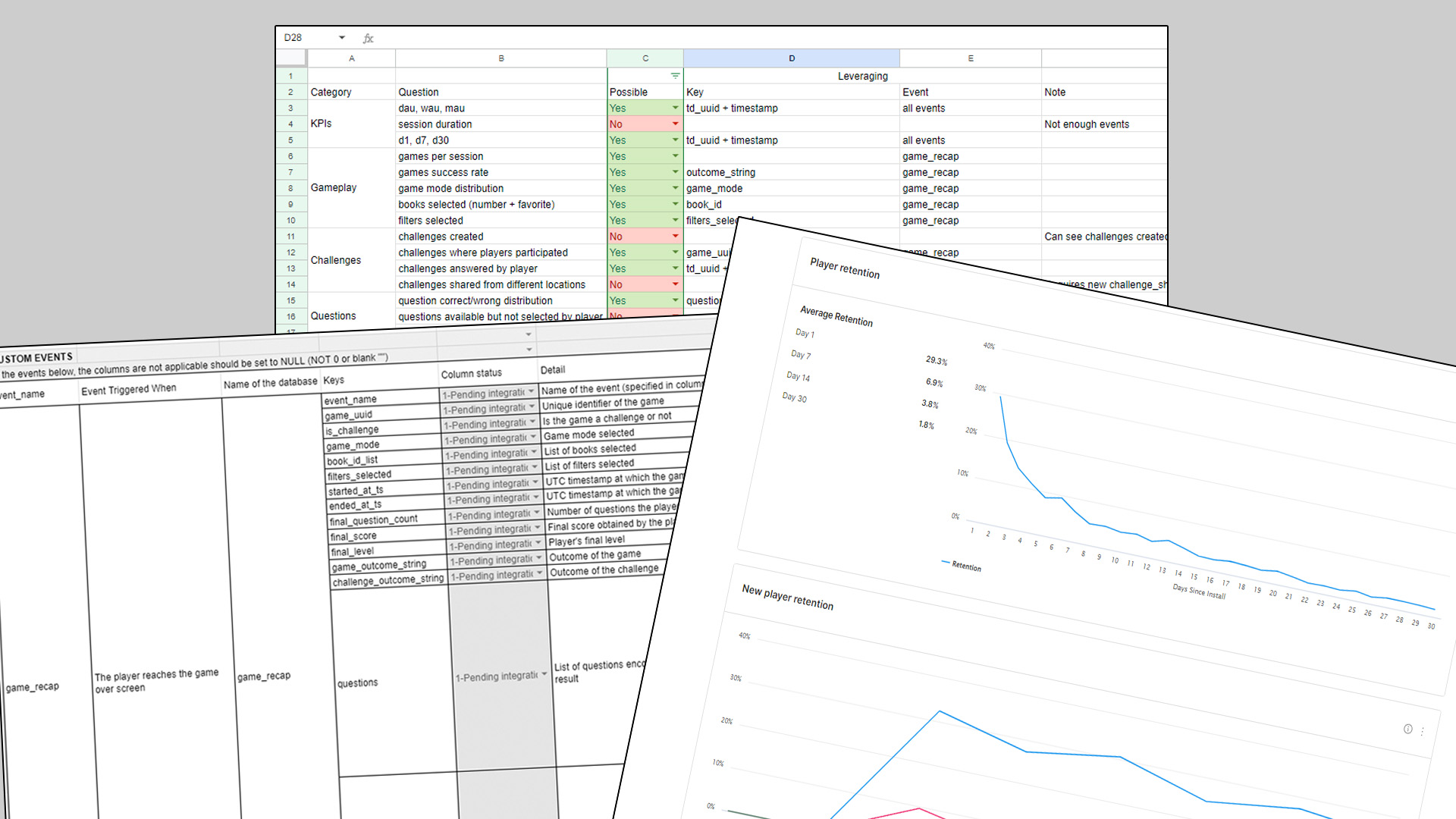

KPI DEFINITION

Business first, data after

The initial and most critical step in any successful data collection process is identifying the specific questions that your data must address. This involves carefully defining your key performance indicators (KPIs) from the outset, ensuring that each piece of data collected is purposeful and directly relevant to your objectives.

By developing these indicators deliberately, you can focus on collecting precisely the data your product needs to succeed, while avoiding the accumulation of unnecessary or irrelevant information in your database. Beyond collection itself, this approach produces a streamlined and efficient data ecosystem and raises the overall quality and utility of the information gathered. The result is more accurate and relevant data, better-informed decisions, and better outcomes for your product.

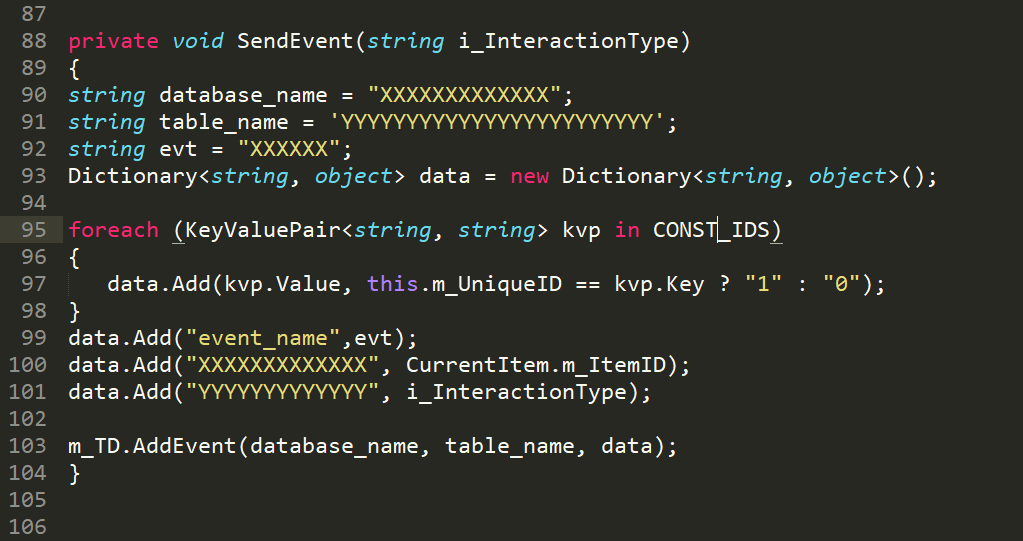

EVENT STRUCTURING

For all types of data streams

Custom event structuring is essential for developing a robust, clean dataset. By tying each custom event to your key performance indicators (KPIs) or product design, the event map ensures that every recorded event is not only useful but also versatile enough to answer multiple questions. This structured approach captures the data in a format that is straightforward for developers to implement and interpret, making it a powerful tool for informed decision-making and strategic planning.
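As an illustration only, an event map can be sketched as a small lookup that ties each event name to the properties it must carry and the KPIs it feeds. The event names, properties, and KPI names below are hypothetical and not taken from any client project.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Any


@dataclass(frozen=True)
class Event:
    name: str
    user_id: str
    timestamp: datetime
    properties: dict[str, Any] = field(default_factory=dict)


# Hypothetical event map: required properties and the KPIs each event serves.
EVENT_MAP = {
    "purchase_completed": {
        "required": {"item_id", "price", "currency"},
        "kpis": ["revenue", "conversion_rate"],
    },
    "session_started": {
        "required": {"platform"},
        "kpis": ["daily_active_users", "retention"],
    },
}


def build_event(name: str, user_id: str, **properties: Any) -> Event:
    """Create an event, rejecting names or payloads the event map does not allow."""
    spec = EVENT_MAP.get(name)
    if spec is None:
        raise ValueError(f"unknown event: {name}")
    missing = spec["required"] - set(properties)
    if missing:
        raise ValueError(f"{name} is missing properties: {sorted(missing)}")
    return Event(name, user_id, datetime.now(timezone.utc), properties)
```

Keeping the KPI list next to each event makes it easy to see which events can be dropped when a KPI is retired, and which single event can serve several questions.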

EVENT VALIDATION

Accountable data

Through meticulous 1:1 reviews of the events collected and hands-on walkthroughs of your product, we ensure that every piece of data gathered is entirely useful and accurate. Quality data begins at the source, and leveraging our extensive experience in quality assurance, we help identify and address all potential edge cases and issues. This proactive approach guarantees that problems are resolved before transitioning to production, ensuring the reliability and integrity of your data. With our expert oversight, you can trust that your data is not just collected but curated to drive meaningful insights and decisions.

INTEGRATION SUPPORT

We’ve seen it all

Drawing on our extensive expertise in analytics integration, we offer comprehensive support to all our clients, ensuring they have direct access to the assistance they need. Our services range from straightforward integrations of analytics SDKs to handling more intricate scenarios involving custom metrics.

We dedicate the necessary time to meticulously set up and verify each integration, guaranteeing that you have all the tools required to begin collecting high-quality, actionable data. By focusing on precision and clarity from the outset, we help you establish a solid foundation for data-driven decision-making, ensuring your analytics are not just implemented, but optimized to deliver valuable insights tailored to your specific business needs.

PROCESSING

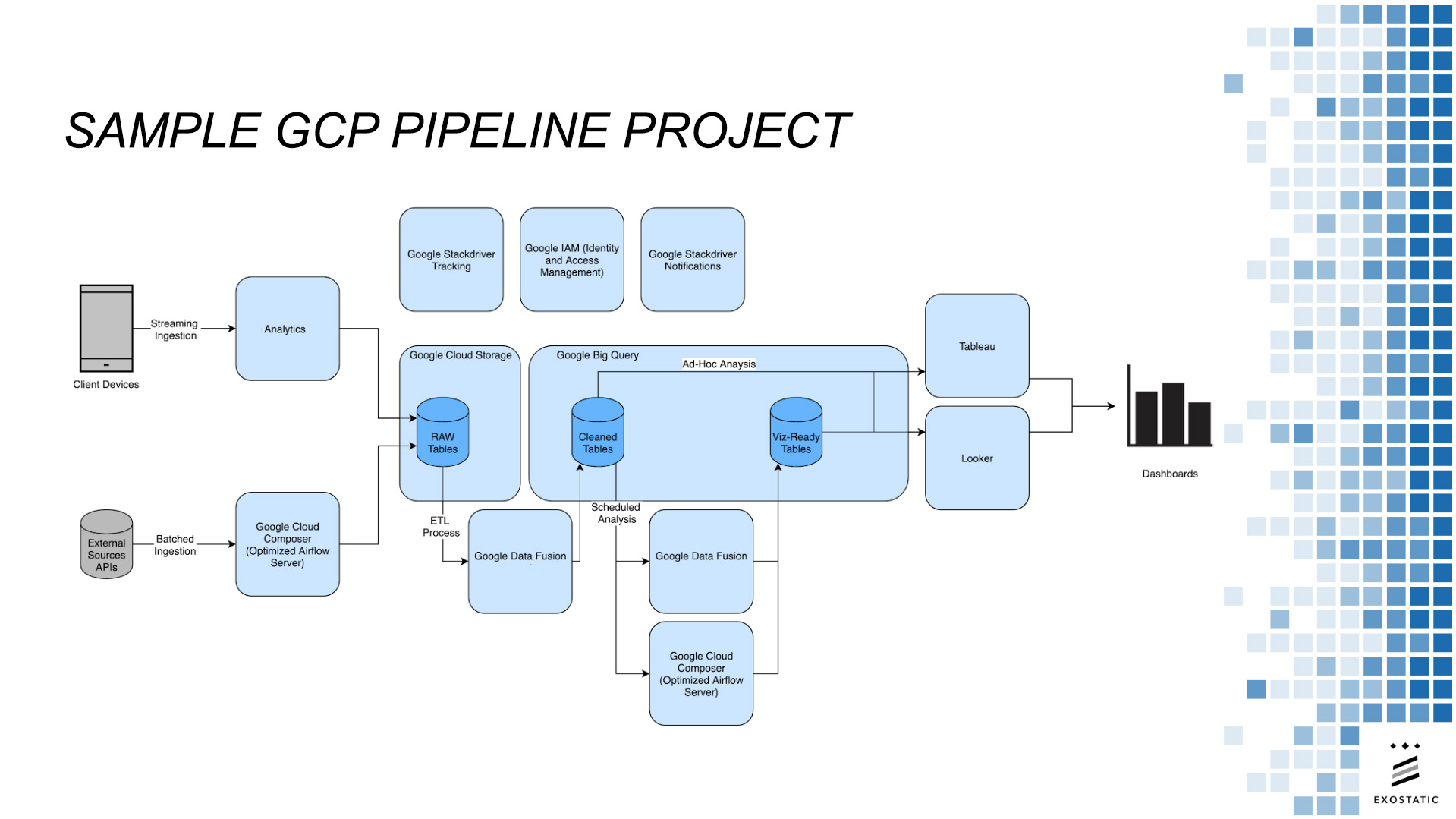

DATA GATHERING

Getting your data wherever it is, whatever format it’s in

We interface with the data platforms you are already using and build extraction scripts to retrieve your data. Once the data is extracted, we confirm its validity and reprocess all of it into a single format. We then centralize all the data sources in one place so the data is ready to be aggregated, analyzed and exported for visualization.
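A minimal sketch of that idea, assuming two hypothetical sources (one CSV export, one JSON export) with different field names. The field names and the validity rule are illustrative assumptions, not a description of any specific platform.

```python
import csv
import io
import json


def from_csv(text: str) -> list[dict]:
    """Map a CSV export with columns user_id,event to the common format."""
    return [
        {"user_id": row["user_id"], "event": row["event"], "source": "csv"}
        for row in csv.DictReader(io.StringIO(text))
    ]


def from_json(text: str) -> list[dict]:
    """Map a JSON export with keys uid/name to the common format."""
    return [
        {"user_id": str(row["uid"]), "event": row["name"], "source": "json"}
        for row in json.loads(text)
    ]


def is_valid(record: dict) -> bool:
    """Illustrative validity rule: both identifying fields must be non-empty."""
    return bool(record["user_id"]) and bool(record["event"])


def centralize(csv_text: str, json_text: str) -> list[dict]:
    """Extract both sources, drop invalid records, return one combined list."""
    records = from_csv(csv_text) + from_json(json_text)
    return [r for r in records if is_valid(r)]
```

For example, `centralize("user_id,event\nu1,login\n,login\n", '[{"uid": 2, "name": "purchase"}]')` keeps the two complete records and drops the CSV row with an empty user ID.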

PIPELINE AUTOMATION

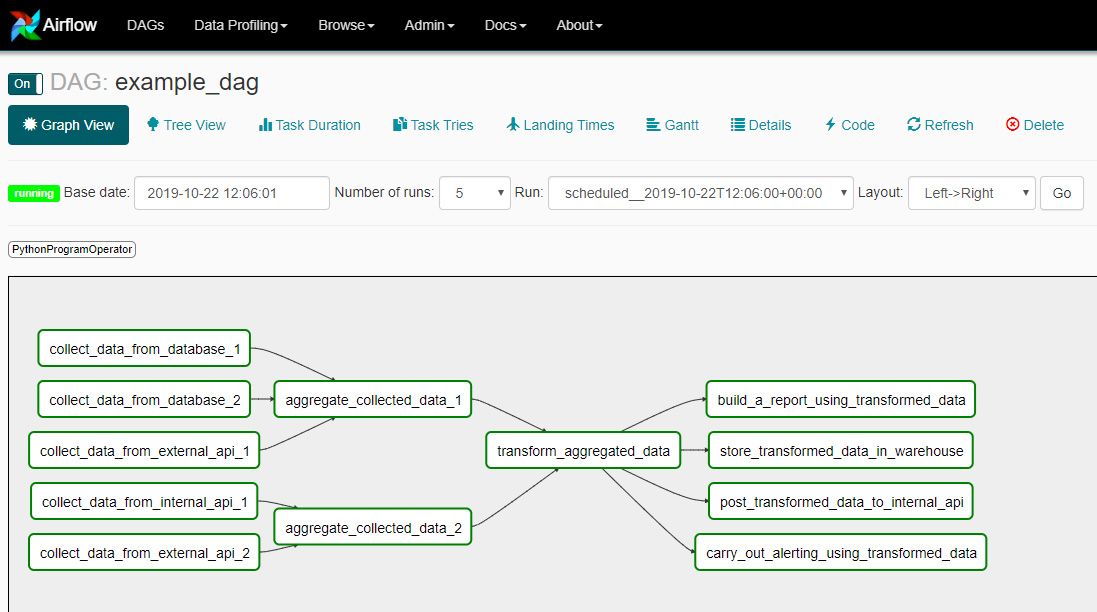

Limiting manual interventions

We define with you the export frequency of the multiple data sources and the refresh rates of the dashboards. A pipeline is then designed based on those requirements and all the processes, queries and scripts are scheduled to minimize the calculation times. We also make sure to keep the pipeline design flexible enough to quickly integrate a new data source or product according to the evolution of your business.
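One way to picture such a pipeline is as a dependency graph whose steps run in an order that respects their inputs. The sketch below uses Python's standard `graphlib` module; the step names are hypothetical, and a real deployment would typically rely on a dedicated scheduler rather than this toy ordering.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each step maps to the set of steps it depends on.
PIPELINE = {
    "extract_store_a": set(),
    "extract_store_b": set(),
    "clean": {"extract_store_a", "extract_store_b"},
    "aggregate": {"clean"},
    "refresh_dashboard": {"aggregate"},
}


def run_order(pipeline: dict[str, set[str]]) -> list[str]:
    """Return one valid execution order in which every step follows its inputs."""
    return list(TopologicalSorter(pipeline).static_order())


def add_source(pipeline: dict[str, set[str]], name: str) -> dict[str, set[str]]:
    """Return a copy of the pipeline with a new extraction step feeding 'clean'."""
    updated = {step: set(deps) for step, deps in pipeline.items()}
    updated[name] = set()
    updated["clean"].add(name)
    return updated
```

Under this layout, integrating a new data source is a one-line change (`add_source(PIPELINE, "extract_store_c")`), which is the kind of flexibility the design aims for.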

DATA TRANSFORMATION

Raw data is useless if not properly transformed

Once the data is imported, we apply a series of filters and processes to clean it. We prepare metadata and lookup tables to join relevant tables and data sources together. We then aggregate the data to whatever level is required to answer specific questions.
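The three steps (clean, join through a lookup table, aggregate) can be sketched in a few lines of plain Python. The sample rows, the country lookup, and the "revenue by country" question are all made-up assumptions for illustration.

```python
from collections import defaultdict

# Hypothetical raw rows; amounts arrive as strings and may be missing.
raw_events = [
    {"user_id": "u1", "event": "purchase", "amount": "4.99"},
    {"user_id": "u2", "event": "purchase", "amount": "9.99"},
    {"user_id": "u1", "event": "purchase", "amount": None},
    {"user_id": "u3", "event": "purchase", "amount": "1.00"},
]

# Hypothetical lookup table used to enrich events with a country.
user_country = {"u1": "CA", "u2": "FR", "u3": "CA"}


def clean(rows: list[dict]) -> list[dict]:
    """Drop rows without an amount and convert the rest to floats."""
    return [
        {**row, "amount": float(row["amount"])}
        for row in rows
        if row.get("amount") is not None
    ]


def join_country(rows: list[dict], lookup: dict[str, str]) -> list[dict]:
    """Attach a country to each row, defaulting to 'unknown'."""
    return [{**row, "country": lookup.get(row["user_id"], "unknown")} for row in rows]


def revenue_by_country(rows: list[dict]) -> dict[str, float]:
    """Aggregate amounts to the country level."""
    totals: dict[str, float] = defaultdict(float)
    for row in rows:
        totals[row["country"]] += row["amount"]
    return dict(totals)


result = revenue_by_country(join_country(clean(raw_events), user_country))
```

The aggregation level is chosen by the question being asked: swapping `country` for another key in the final step answers a different question from the same cleaned data.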

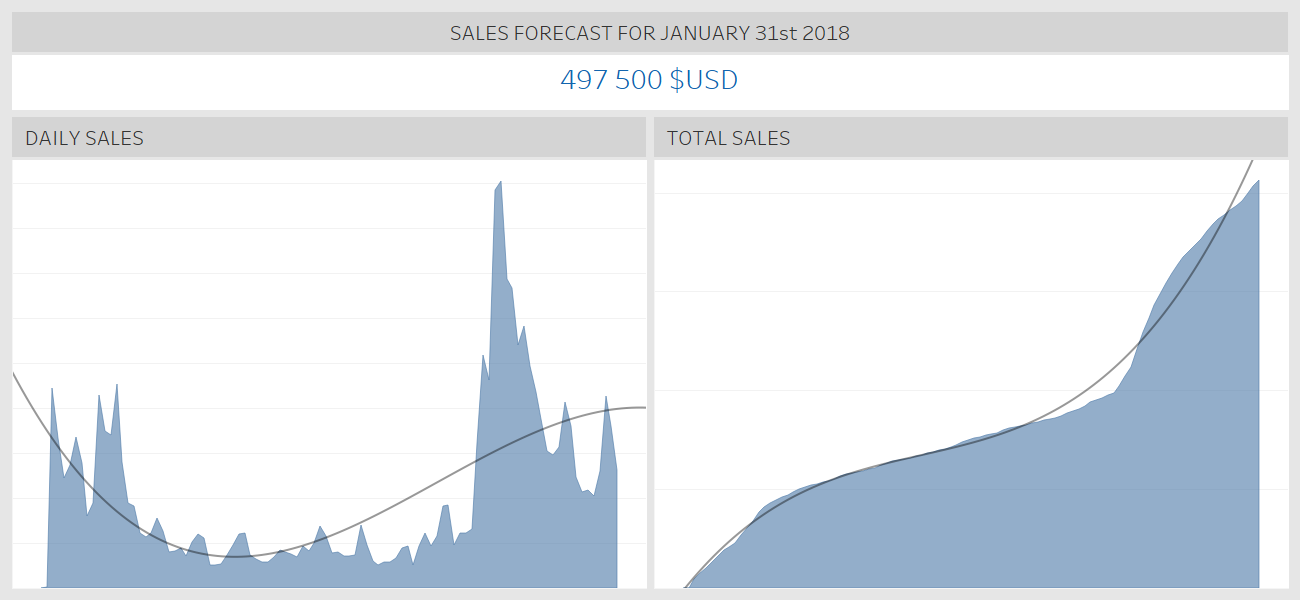

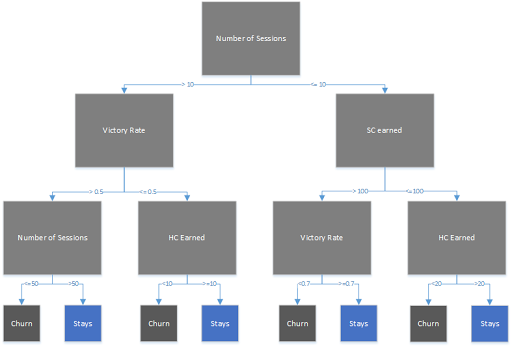

MACHINE LEARNING

When A.I. outperforms humans

Depending on the use case, we can apply a wide variety of machine learning models to your data to extract insights. Predictive models can be used to forecast time series or predict user churn. Classification models can separate your users into meaningful groups, and sentiment analysis can process users' opinions and reviews to find areas for improvement.

REPORTING

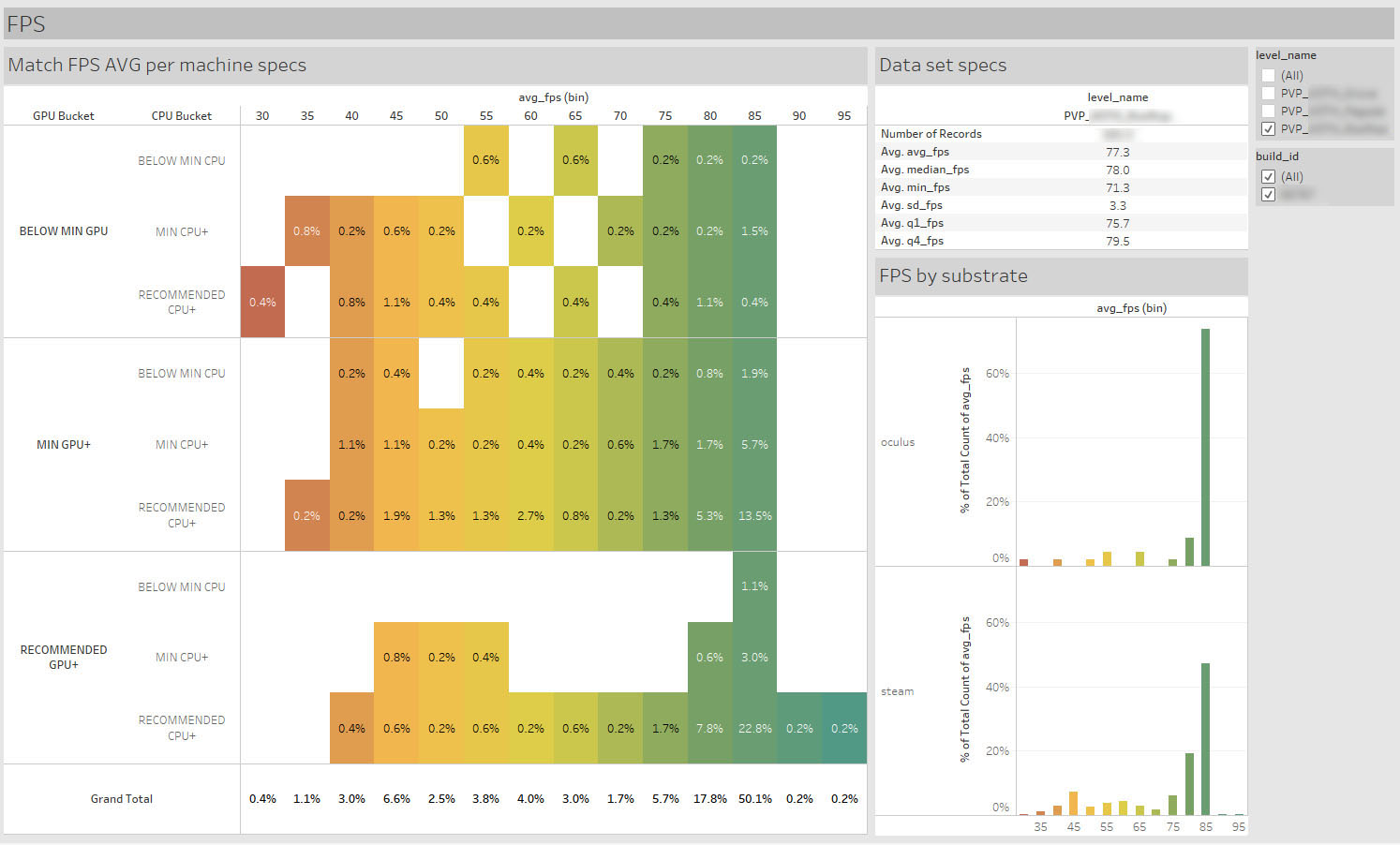

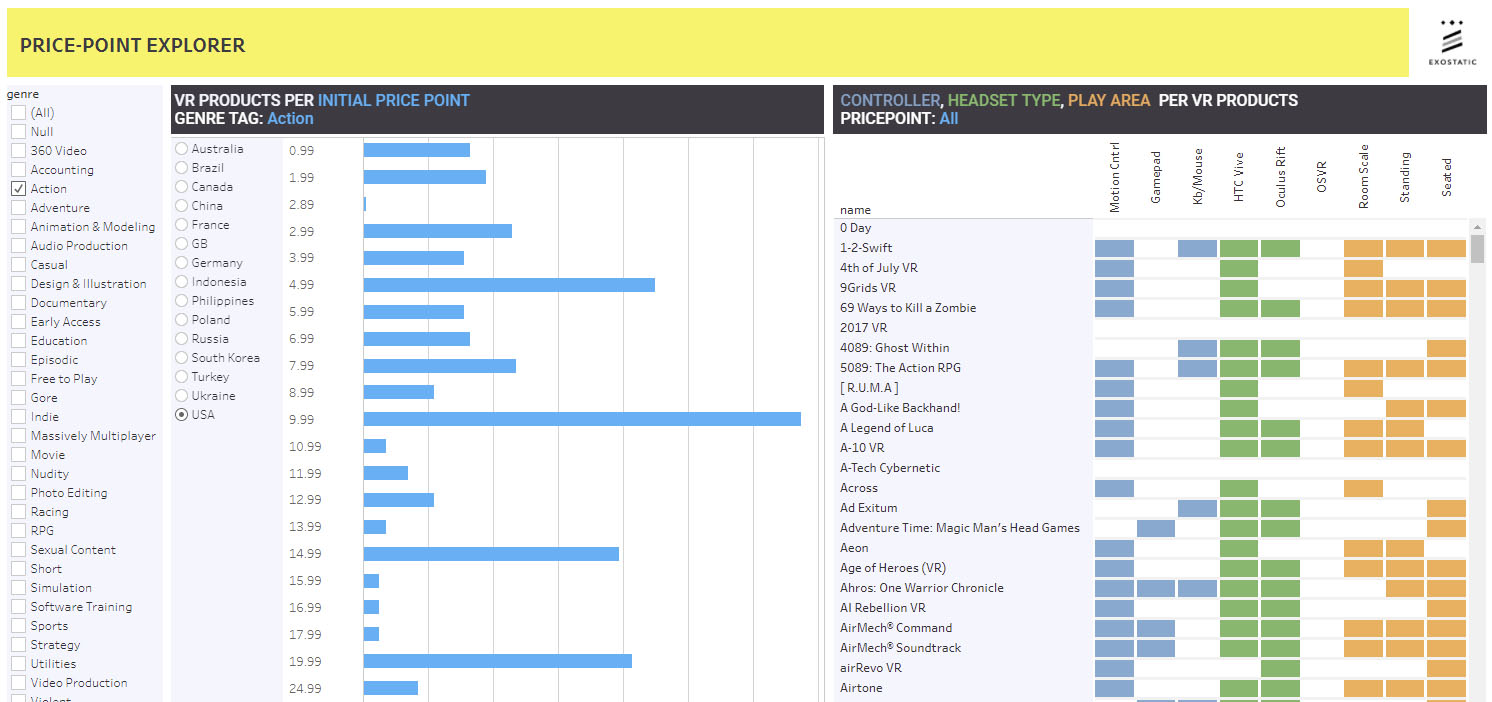

DESCRIPTIVE ANALYSIS

Understanding what happened

Businesses collect impressive amounts of data from numerous sources. In its initial form, raw data doesn’t offer much value without the help of data experts. At Exostatic, we summarize your data into a clear and understandable format to simplify further analysis. Our dashboards allow you to dig into your data and extract the meaningful pieces of information. We also aggregate large amounts of data to show trends.

QA & INTEGRITY

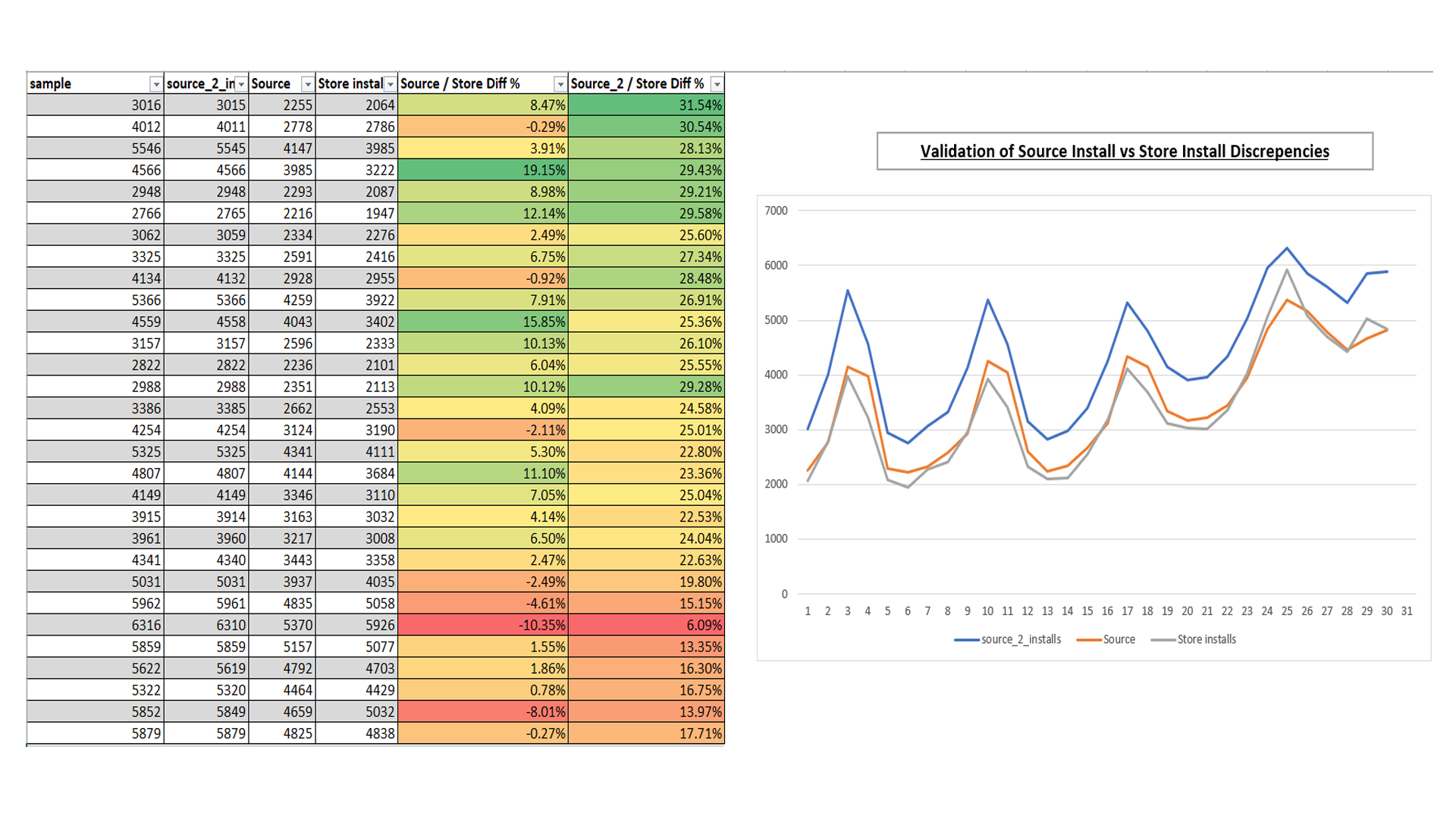

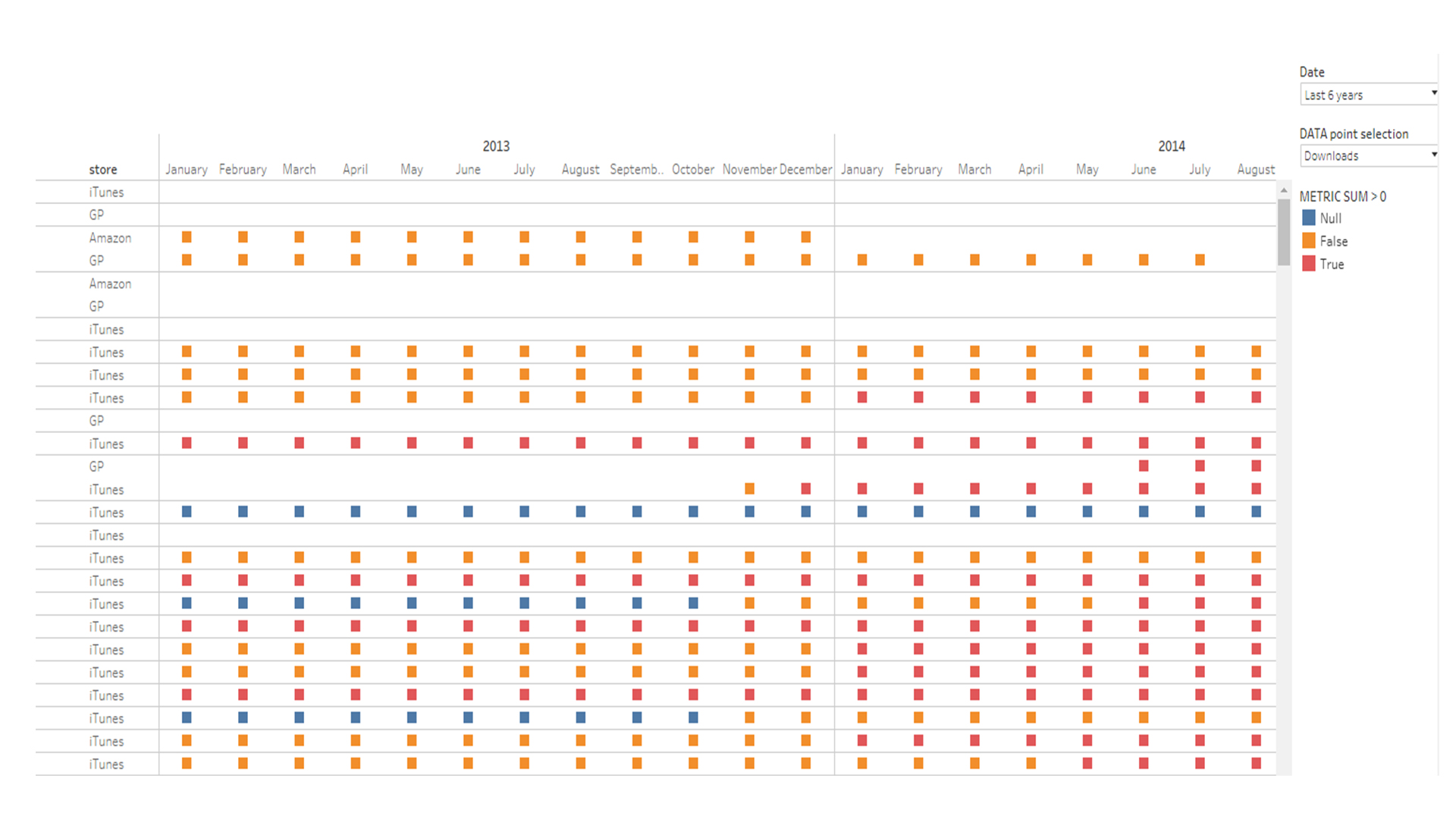

DATA QA

Expert Level Quality Assurance

With years of experience in quality assurance, we track down even the most obscure edge cases and offer recommendations to guarantee correct tracking in these cases. Using simple business rules, mathematical models or M.L. models, we can set up processes that warn business users when their data is abnormal or of poor quality.
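As a rough sketch of the first two options, the check below combines one business rule (a day with no events is always suspicious) with a basic statistical rule (a z-score against recent history). The function name, the threshold of 3, and the sample counts are assumptions chosen for illustration.

```python
from statistics import mean, stdev


def check_daily_count(history: list[int], today: int, z_threshold: float = 3.0) -> list[str]:
    """Return warning messages for today's event count; an empty list means no alert."""
    warnings = []

    # Business rule: receiving no events at all is always worth a warning.
    if today <= 0:
        warnings.append("no events received today")

    # Statistical rule: flag counts far from the recent average.
    if len(history) >= 2:
        mu = mean(history)
        sigma = stdev(history)
        if sigma > 0 and abs(today - mu) / sigma > z_threshold:
            warnings.append(f"count {today} deviates from recent mean {mu:.1f}")

    return warnings


recent = [100, 102, 98, 101, 99]
normal_day = check_daily_count(recent, 100)
spike_day = check_daily_count(recent, 150)
```

Here `normal_day` is empty while `spike_day` carries one warning. A fixed z-score is a crude model: it ignores seasonality and trends, which is where more elaborate mathematical or M.L. models come in.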

QA REPORTING

Clear, extensive & comprehensive

Data bugs always exist and are influenced by various factors, from the development team's experience to the complexity of the project and the scope of the data source. With detailed testing results in hand, the team can focus its efforts and prioritize fixes based on their impact on the data.